Chatbot example with OpenAI, Next.js and Literal AI

The goal of this tutorial is to set up a simple chatbot and give it superpowers using Literal AI.

We will explore the following functionalities of the Literal AI platform:

- customizing the experience with a System Prompt that can be managed separately from the code

- logging user conversations as Threads

- allowing our users to Score the chatbot’s responses

For this tutorial, you will need the following:

- An OpenAI API key (which you can get on their website)

- A Literal AI API key (which you can get in your project’s General settings)

Set up the project

Let’s create a Next.js project with its handy CLI, create-next-app.

The CLI will ask a few questions about how the project should be initialized. For this guide we will use TypeScript, Tailwind and the App Router.

Now we can install the extra dependencies.

- openai is the official package to communicate with OpenAI

- ai is Vercel’s AI SDK, with helpers to integrate OpenAI into our app

- @literalai/client is a tool that will help us customize and monitor our app

And that’s it! You can now create a .env file at the root of the Next.js app, and copy your API keys inside:
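As a rough guide, the .env file needs one entry per key. The sketch below lists them in a comment and adds a small check that both are set. `OPENAI_API_KEY` is the variable the openai package reads by default and `LITERAL_API_KEY` is the one the Literal client reads. The `missingEnvKeys` helper is a hypothetical name used only for illustration.

```typescript
// Expected entries in .env (replace the placeholders with your own keys):
//   OPENAI_API_KEY=<your OpenAI API key>
//   LITERAL_API_KEY=<your Literal AI API key>
const REQUIRED_KEYS = ["OPENAI_API_KEY", "LITERAL_API_KEY"];

// Hypothetical helper: returns the required keys that are absent or blank.
function missingEnvKeys(env: { [key: string]: string | undefined }): string[] {
  return REQUIRED_KEYS.filter((key) => {
    const value = env[key];
    return value === undefined || value.trim() === "";
  });
}

// Example with an in-memory object; on the server you would pass process.env.
const missing = missingEnvKeys({ OPENAI_API_KEY: "sk-example", LITERAL_API_KEY: "" });
console.log(missing); // the blank Literal AI key is reported as missing
```

Running a check like this when the server starts makes a missing key fail fast instead of surfacing later as an authentication error.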

Chat with OpenAI

Our first task is to create a simple Chat page that takes messages from the user, sends them to gpt-3 and then streams gpt-3’s response to the user.

For this we will need a server route at /api/chat to communicate with OpenAI:
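The core of such a route can be sketched without any framework code: validate the posted messages, then build the parameters for a streamed chat completion. This is a minimal sketch, not the tutorial's actual route. The model name is an assumption (the text only says "gpt-3"), and `parseMessages` and `buildCompletionParams` are hypothetical helper names.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Assumption: "gpt-3" in the text stands for a chat model such as this one.
const MODEL = "gpt-3.5-turbo";

function isRole(value: unknown): value is Role {
  return value === "system" || value === "user" || value === "assistant";
}

// Validate the JSON body posted to /api/chat and extract its messages.
function parseMessages(body: unknown): ChatMessage[] {
  if (typeof body !== "object" || body === null) {
    throw new Error("Request body must be a JSON object");
  }
  const messages = (body as { messages?: unknown }).messages;
  if (!Array.isArray(messages)) {
    throw new Error("`messages` must be an array");
  }
  return messages.map((item: unknown): ChatMessage => {
    if (typeof item !== "object" || item === null) {
      throw new Error("Each message must be an object");
    }
    const { role, content } = item as { role?: unknown; content?: unknown };
    if (!isRole(role) || typeof content !== "string") {
      throw new Error("Each message needs a valid role and a string content");
    }
    return { role, content };
  });
}

// Parameters for a streamed chat completion request.
function buildCompletionParams(messages: ChatMessage[]) {
  return { model: MODEL, stream: true as const, messages };
}
```

In the actual route, the object returned by `buildCompletionParams` would be handed to the OpenAI client's chat completion call, and the resulting stream would be returned to the browser.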

On the frontend we will use Vercel’s ai package, which provides easy-to-use helpers for our use case.

Now you can launch the app:

And you should see a working chatbot at http://localhost:3000/.

A simple chatbot

As it stands, this page just pipes your messages directly to ChatGPT. Let us customize the experience and make it observable thanks to Literal AI.

Integrate Literal AI

1. Setting up

Instantiate a Literal Client in your API route. By default, it will use the LITERAL_API_KEY environment variable.

2. Adding a system prompt


ℹ️ Literal AI allows you to decouple your prompts from your application code. This has various benefits in terms of code readability, but also allows your AI experts to tweak prompts and settings without having to redeploy your application.

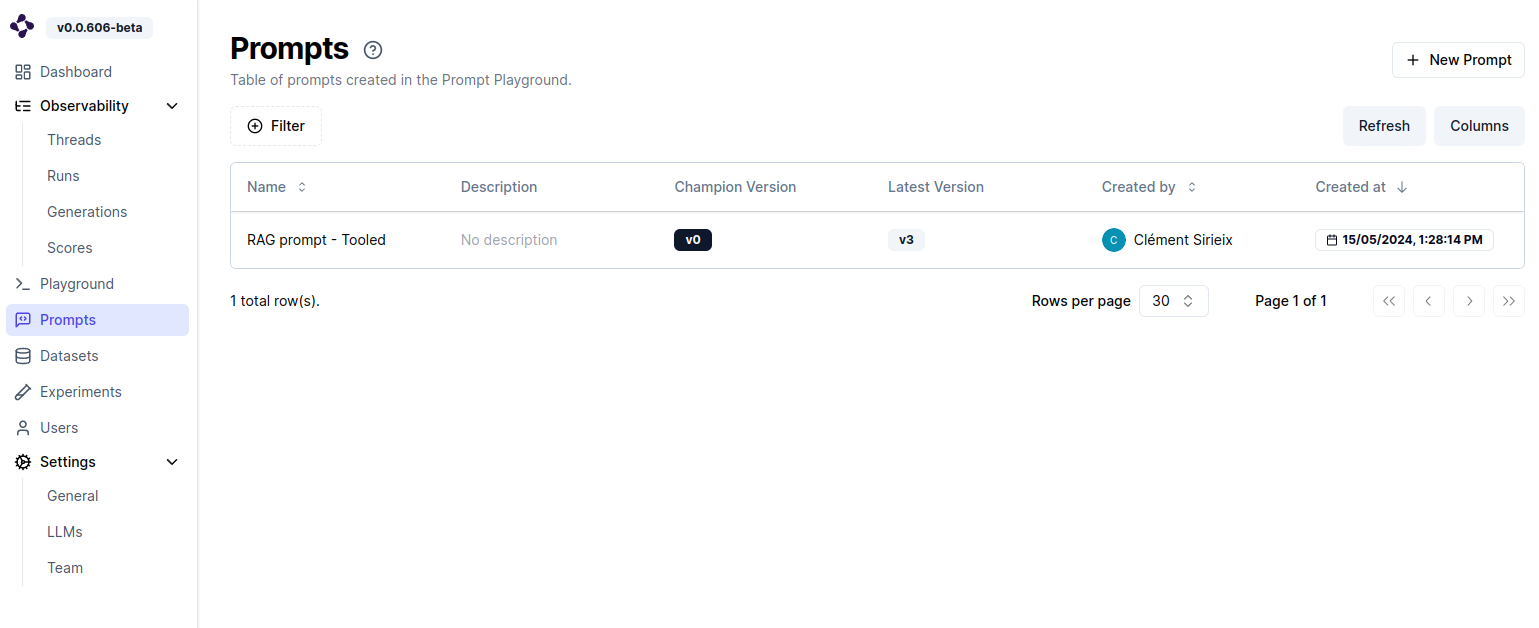

Navigate to the Prompts page on Literal AI, and create a new prompt by clicking on “New Prompt”.

For this example we will use a simple prompt to specialize our chatbot in wildlife.

- Add a Message by clicking on the “+ Message” button

Creating a message

- Enter the following as your System Prompt:

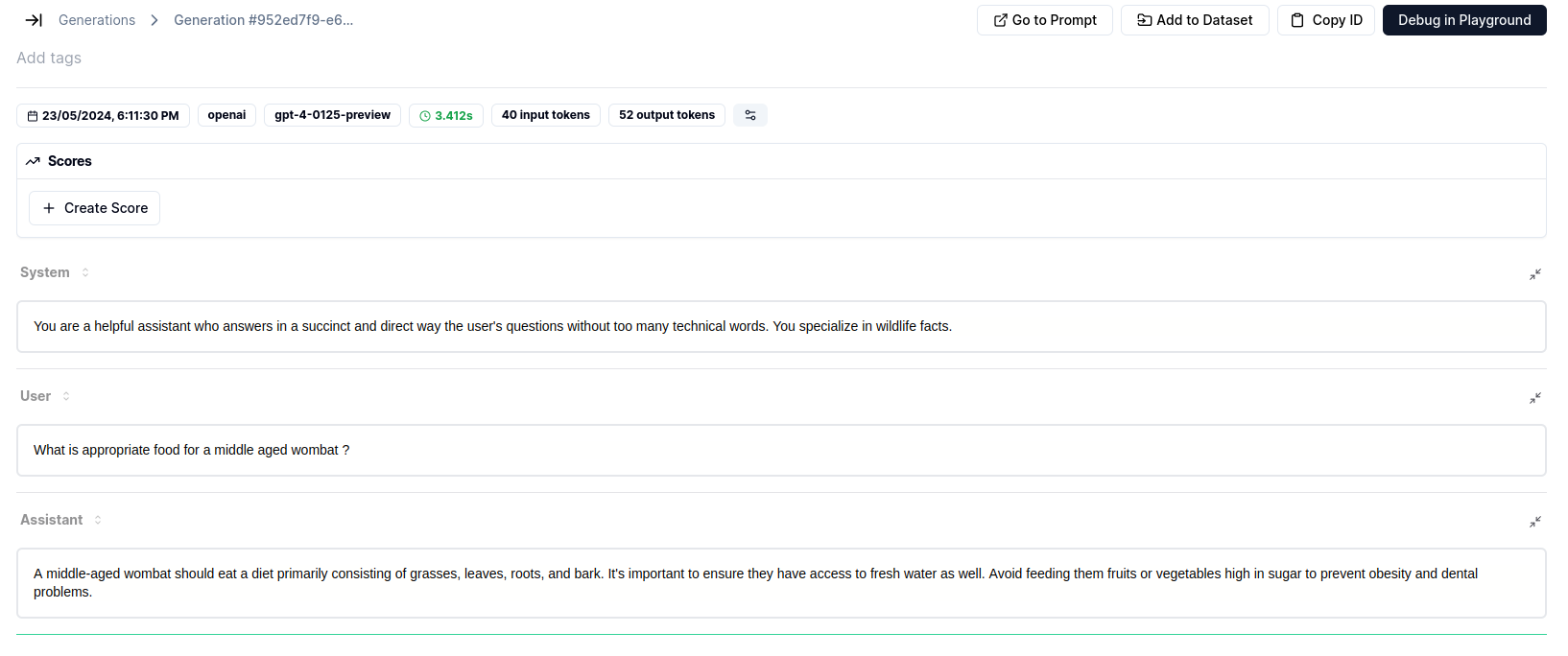

You are a helpful assistant who answers in a succinct and direct way the user’s questions without too many technical words. You specialize in wildlife facts.

- Save it and give it the following name: “Simple Chatbot”. Be sure to give it that exact name as it will be used later to fetch the prompt from our application.

Once this is done, edit your API route to insert the prompt & settings with our user’s messages:
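The merging step itself is simple and can be sketched in isolation: the prompt's messages go first, the user's messages follow, and the prompt's settings are spread into the request. The `ManagedPrompt` shape and the `applyPrompt` helper are assumptions made for this sketch. They are not the Literal AI SDK's types, and the settings values are made up.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical shape of a prompt once it has been fetched by name.
interface ManagedPrompt {
  name: string;
  messages: ChatMessage[];
  settings: { model: string; temperature?: number };
}

// Put the managed prompt first, then the conversation, and apply its settings.
function applyPrompt(prompt: ManagedPrompt, userMessages: ChatMessage[]) {
  return {
    ...prompt.settings,
    stream: true as const,
    messages: [...prompt.messages, ...userMessages],
  };
}

// Stand-in for the prompt created above; the settings values are made up.
const simpleChatbot: ManagedPrompt = {
  name: "Simple Chatbot",
  messages: [
    {
      role: "system",
      content:
        "You are a helpful assistant who answers in a succinct and direct way the user’s questions without too many technical words. You specialize in wildlife facts.",
    },
  ],
  settings: { model: "gpt-3.5-turbo", temperature: 0.5 },
};

const params = applyPrompt(simpleChatbot, [
  { role: "user", content: "How fast is a cheetah?" },
]);
console.log(params.messages.map((m) => m.role)); // system message first, then the user's
```

Because the settings come from the stored prompt rather than from constants in the route, changing the model or temperature on Literal AI takes effect without touching this code.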

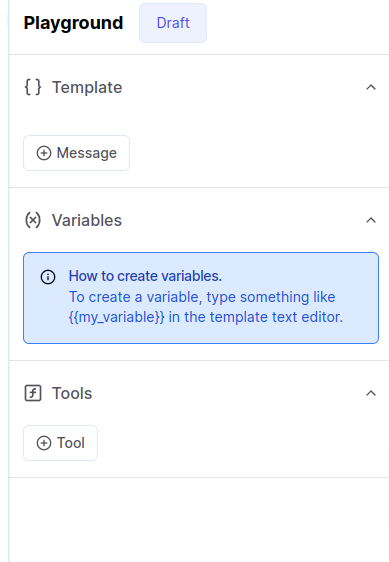

A wildlife chatbot

3. Threads

At its core, Literal AI allows you to observe and monitor the interactions between your users and your chatbot. To achieve this, we are going to add some code that logs user interactions on the platform.

The simplest way to achieve this is to use the Literal SDK’s instrumentation API.

With that simple operation, all the settings and content of OpenAI calls will be logged on Literal AI.

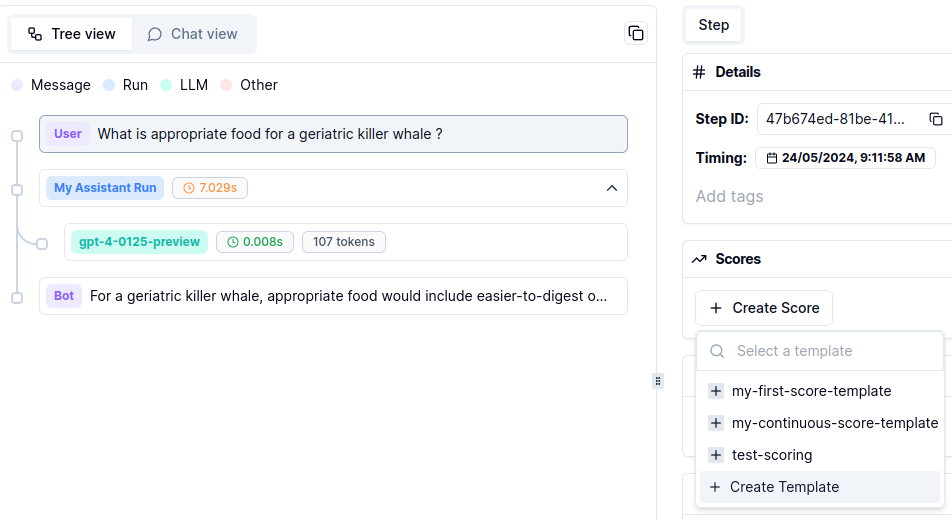

An LLM response, logged on Literal AI

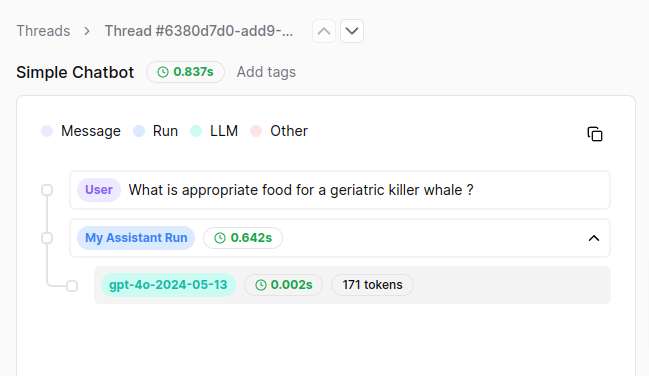

Storing all your Generations as one-shots is impractical though. Wouldn’t it be more convenient if we could group all the messages from a single conversation into one Thread?

First, we need to create a unique ID for the conversation, and another one for each subsequent exchange between the user and the LLM. We will use crypto.randomUUID for this as it will generate an ID that is suitable to use on Literal.
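A minimal sketch of this ID scheme follows, with the generator injected so that the logic is easy to inspect. `createConversationIds` is a hypothetical helper. In the app you would pass `() => crypto.randomUUID()` as the generator and keep the thread ID in component state so it survives re-renders.

```typescript
type IdGenerator = () => string;

interface ConversationIds {
  threadId: string; // stays the same for the whole conversation
  nextRunId: () => string; // returns a fresh ID for each exchange
}

// Hypothetical helper: one thread ID up front, one run ID per exchange.
function createConversationIds(generateId: IdGenerator): ConversationIds {
  const threadId = generateId();
  return { threadId, nextRunId: () => generateId() };
}

// In the app: createConversationIds(() => crypto.randomUUID());
// Here a counter stands in for the UUID generator so the result is readable.
let counter = 0;
const ids = createConversationIds(() => `id-${++counter}`);
const firstRunId = ids.nextRunId();
const secondRunId = ids.nextRunId();
console.log(ids.threadId, firstRunId, secondRunId);
```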

Then we use these IDs in our API route to create a new Thread (that will be global to the conversation) and a new Run (that will change with each interaction).

To achieve this we use Literal AI SDK’s wrappers system.
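To illustrate the nesting only, here is a toy in-memory recorder with the same wrapping idea: a thread wrapper that is reused across exchanges, and a run wrapper that records one exchange inside it. None of these names come from the Literal AI SDK. `InMemoryTrace`, `wrapThread` and `wrapRun` are hypothetical, and the code is synchronous for brevity whereas real LLM calls are asynchronous.

```typescript
interface RunRecord {
  id: string;
  input: string;
  output: string | null;
}

interface ThreadRecord {
  id: string;
  runs: RunRecord[];
}

// Hypothetical recorder, not the Literal AI SDK.
class InMemoryTrace {
  private threads: { [id: string]: ThreadRecord } = {};

  // Reuse the thread if it already exists so all exchanges share one record.
  wrapThread<T>(threadId: string, fn: (thread: ThreadRecord) => T): T {
    let thread = this.threads[threadId];
    if (thread === undefined) {
      thread = { id: threadId, runs: [] };
      this.threads[threadId] = thread;
    }
    return fn(thread);
  }

  // Record one user/LLM exchange as a run nested under the thread.
  wrapRun(thread: ThreadRecord, runId: string, input: string, fn: () => string): string {
    const run: RunRecord = { id: runId, input, output: null };
    thread.runs.push(run);
    const output = fn();
    run.output = output;
    return output;
  }

  getThread(threadId: string): ThreadRecord | undefined {
    return this.threads[threadId];
  }
}

// A fake model stands in for the OpenAI call.
const trace = new InMemoryTrace();
const fakeModel = (input: string) => `echo: ${input}`;

function exchange(threadId: string, runId: string, input: string): string {
  return trace.wrapThread(threadId, (thread) =>
    trace.wrapRun(thread, runId, input, () => fakeModel(input))
  );
}

exchange("thread-1", "run-1", "Hello");
exchange("thread-1", "run-2", "Tell me about owls");
```

Both calls use the same thread ID, so the recorder ends up with a single thread holding two runs, which mirrors the Thread and Run hierarchy described above.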

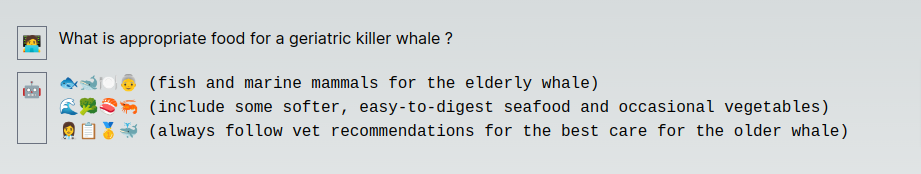

Now the following exchange in our chat application:

Monitored chatbot

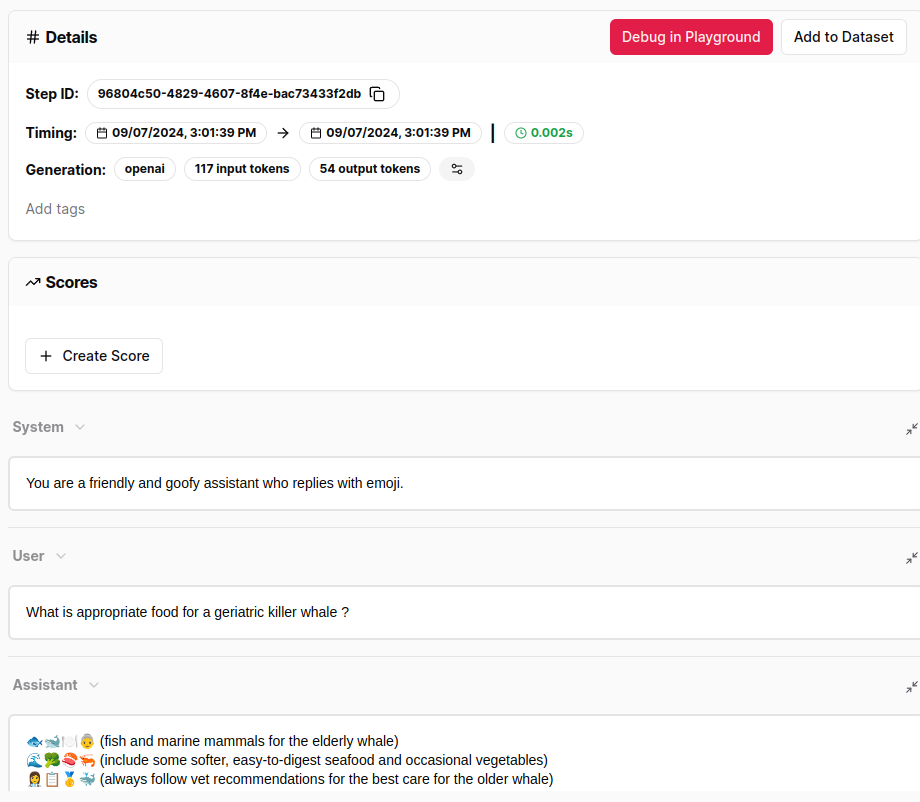

Will be traced like so on Literal AI. As you can see we can easily follow the flow of the conversation, and the “Run” and “Generation” steps allow us to deep dive into the technical aspects of each LLM call and response.

Conversation tree

Details of the LLM response

4. Human evaluation

The last aspect we’ll approach today is that of human evaluation. By allowing our users to rate each interaction with the chatbot (using a 👍 button), we can easily construct a dataset of known good interactions, which can be used later on to evaluate prompt variations, evaluate different models, or even further fine-tune an existing model.

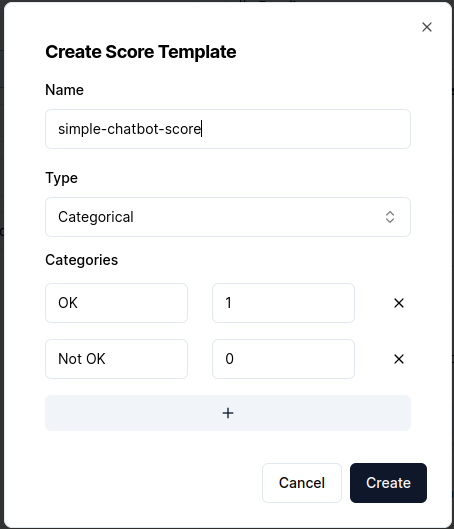

To accomplish this, we will first need to create a Score Template on Literal AI.

- Navigate to any of your existing threads on Literal

- Click on any Step of your Thread, and on the right-hand panel click Create Score then Create Template

Creating a score template

- Name your score template simple-chatbot-score and give it the following settings:

Simple Chatbot Score

Now let us think about the plumbing we will need to assemble this feature: to be able to vote on a chatbot’s message, we will need to know its Step ID on Literal AI. Right now we have two sets of IDs:

- the runId that we generate before each message is sent

- the message.id that Vercel AI SDK generates after each message is sent

So we will need to do some mapping between the two. We also want to keep track of the messages that have already been evaluated by the user.
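One way to hold this bookkeeping is a small state object updated immutably, which fits naturally into React state. This is a sketch under assumptions: `FeedbackState` and the three helpers are hypothetical names, and the IDs in the example are placeholders.

```typescript
interface FeedbackState {
  runIdByMessageId: { [messageId: string]: string };
  upvotedMessageIds: string[];
}

const initialFeedbackState: FeedbackState = {
  runIdByMessageId: {},
  upvotedMessageIds: [],
};

// Remember which run produced a given message once its message ID is known.
function linkMessageToRun(state: FeedbackState, messageId: string, runId: string): FeedbackState {
  return {
    ...state,
    runIdByMessageId: { ...state.runIdByMessageId, [messageId]: runId },
  };
}

// Look up the Step ID (the run ID) to score for a message, if we have one.
function stepIdFor(state: FeedbackState, messageId: string): string | undefined {
  return Object.prototype.hasOwnProperty.call(state.runIdByMessageId, messageId)
    ? state.runIdByMessageId[messageId]
    : undefined;
}

// Record that the user has already evaluated this message.
function markUpvoted(state: FeedbackState, messageId: string): FeedbackState {
  if (state.upvotedMessageIds.indexOf(messageId) !== -1) {
    return state;
  }
  return { ...state, upvotedMessageIds: [...state.upvotedMessageIds, messageId] };
}

// Placeholder IDs for illustration.
let feedback = linkMessageToRun(initialFeedbackState, "msg-a", "run-1");
feedback = markUpvoted(feedback, "msg-a");
```

Each helper returns a new object instead of mutating its argument, so the result can be passed straight to a state setter.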

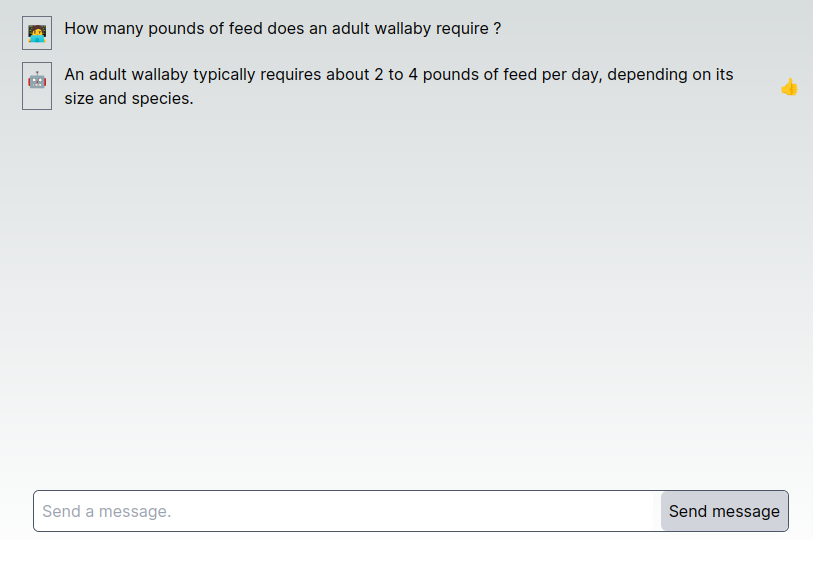

On its own this doesn’t accomplish much, so let us add a 👍 button next to all the messages from the assistant for which we have Step IDs:

Here’s what our application looks like now:

Our simple chatbot, now with thumbs up

To gather the user’s feedback and send it to Literal, we have to create a simple API route that takes as its input a Step ID, and a vote value (1 for 👍, 0 for 👎).
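The input handling for such a route can be sketched on its own: check that the body carries a Step ID and a vote of 0 or 1, then build the score to record. The payload field names in `toScorePayload` are assumptions, not the documented shape of a Literal AI score. Only the template name `simple-chatbot-score` comes from the steps above.

```typescript
interface Vote {
  stepId: string;
  value: 0 | 1; // 1 for a thumbs up, 0 for a thumbs down
}

const SCORE_NAME = "simple-chatbot-score";

// Validate the JSON body posted by the thumbs-up button.
function parseVote(body: unknown): Vote {
  if (typeof body !== "object" || body === null) {
    throw new Error("Request body must be a JSON object");
  }
  const { stepId, value } = body as { stepId?: unknown; value?: unknown };
  if (typeof stepId !== "string" || stepId.length === 0) {
    throw new Error("`stepId` must be a non-empty string");
  }
  if (value !== 0 && value !== 1) {
    throw new Error("`value` must be 0 or 1");
  }
  return { stepId, value: value === 1 ? 1 : 0 };
}

// Assumed payload shape for creating the score; the field names are illustrative.
function toScorePayload(vote: Vote) {
  return { stepId: vote.stepId, name: SCORE_NAME, value: vote.value };
}

const payload = toScorePayload(parseVote({ stepId: "run-1", value: 1 }));
console.log(payload);
```

Rejecting anything other than 0 or 1 at the route boundary keeps malformed votes out of the dataset you are building.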

Now we just need to connect the 👍 button to our new API route:

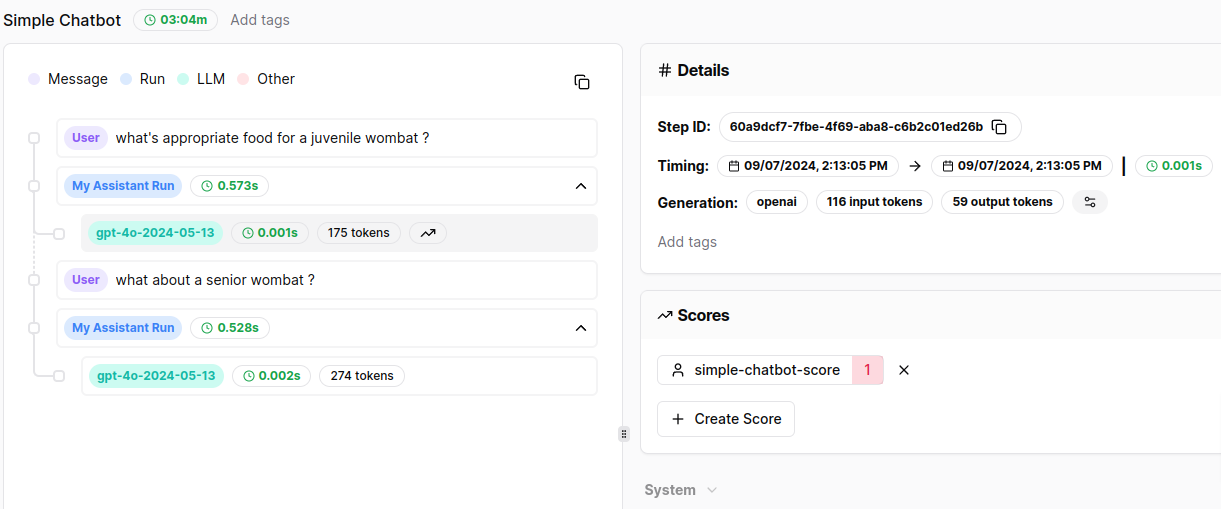

Now you can upvote messages in your conversation, and if you navigate to the corresponding thread in Literal AI you can see that the Assistant Run has been scored:

Step with score

Conclusion

Congratulations on building your own monitored chatbot! As you can see, one of the priorities of Literal AI is to make it easy to integrate with your existing codebase, while providing powerful tools to monitor and improve your AI applications. Here is a rundown of the features we have implemented in this tutorial, and how they will affect the lifecycle of your chatbot:

- System Prompt: By decoupling the prompt from the code, you can iterate on system prompts and LLM settings, without leaving your web browser - and without having to redeploy your application!

- Monitoring: By logging each conversation in a structured way, you can easily replay all the interactions between your users and your chatbot.

- Human Evaluation: By allowing your users to score the chatbot’s responses, you can now passively gather a dataset of known good interactions. Because each of these interactions is linked to a specific Prompt version, you can easily evaluate the impact of prompt variations and LLM settings on the chatbot’s performance.

To dive deeper into evaluation, check out our other Guides & Tutorials, or learn more about what Literal AI can do for you by visiting our website.
